News & Views: What proportion of OA is Diamond OA? Can AI help us find out?

Overview

A recent post on the Open Café listserv posed a question about the true extent of fee-free open access publishing, but it noted the incomplete coverage of the data cited. We have more comprehensive data, but just as we started our analysis, DeepSeek’s release sent markets into turmoil. The stage was set for a timely experiment. We first answer the question using our data. Then we see how the AI did.

Background

What proportion of open access is not paid for by APCs? In discussing this, a recent Open Café listserv post cited studies by Walt Crawford – a librarian, well-known in the academic library and OA communities for his analysis of open access. He has paid particular attention to “diamond” OA journals, which charge neither readers nor authors. His studies are based on data from the Directory of Open Access Journals (DOAJ). Excellent though both sources may be – and, full disclosure, we contribute to the DOAJ – the DOAJ’s remit covers only fully OA (“gold”) journals.

As listserv founder Rick Anderson noted, “By counting only articles published in DOAJ-listed journals, Crawford’s studies radically _undercount_ the number of APC-funded OA articles published – because DOAJ does not list hybrid journals, which always charge an APC for OA and which produce a lot of genuinely OA articles (though exactly how many, no one knows).”

Using our data

Actually, we do know … or at least have some fair estimates of hybrid OA. Our data allows us to determine the share of open access output in APC-free journals, as follows.

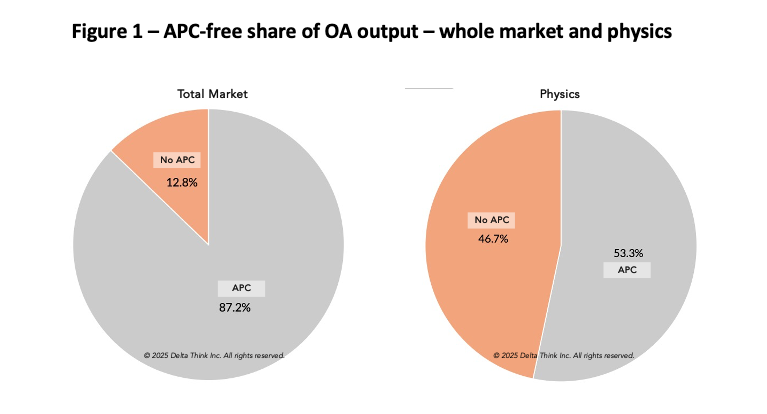

Sources: Publishers' websites, OpenAlex, DOAJ, Delta Think analysis. © 2025 Delta Think Inc. All rights reserved.

The charts analyze shares of open access articles published in both fully OA and hybrid journals.

- They show the split between output in APC-free journals (“No APC” in the orange) and in journals where APCs were charged (“APC” in the grey).

- Journals count as APC-free if they were free of APCs in the year of publication.

- The data cover the five years 2018-2023 (consistent with the Open Café post).

- Around 13% of all OA output in our sample is APC-free (left-hand pie).

- As we often discuss, individual publishers’ experiences may vary. The right-hand pie shows the subset of output for Physics. Note how the APC-free share rises to almost 47% in this case.

- If we choose a slightly earlier timeframe of 2018-2022 – which will become relevant below – we see very little change in the numbers.

- Our data allows further analysis, for example by over 200 subjects, or comparing society titles with others. Please get in touch to find out more.
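The share calculation described in the bullets above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not our production method: the `JournalYear` record, the `apc_free_share` function, and all the journal names and article counts are hypothetical. The one rule taken from the text is that a journal's output counts as APC-free only for the years in which it charged no APC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JournalYear:
    """One journal's OA output in one year (hypothetical record layout)."""
    journal: str
    year: int
    oa_articles: int
    charged_apc: bool  # True if the journal charged an APC in that year


def apc_free_share(rows):
    """Return the fraction of OA articles published in APC-free journal-years."""
    total = sum(r.oa_articles for r in rows)
    if total == 0:
        raise ValueError("sample contains no OA articles")
    apc_free = sum(r.oa_articles for r in rows if not r.charged_apc)
    return apc_free / total


# Made-up sample: Journal A is APC-free in 2021 but introduces an APC in 2022,
# so only its 2021 output counts toward the APC-free share.
SAMPLE = [
    JournalYear("Journal A (fully OA)", 2021, 100, False),
    JournalYear("Journal A (fully OA)", 2022, 120, True),
    JournalYear("Journal B (hybrid)", 2021, 300, True),
    JournalYear("Journal B (hybrid)", 2022, 280, True),
]

print(f"APC-free share of OA output: {apc_free_share(SAMPLE):.1%}")
```

Because hybrid output sits in the denominator, leaving it out (as a DOAJ-only sample does) would push the APC-free share up.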

Our estimate of a 13% APC-free share of the total market compares with around 33% noted in the Open Café post, citing Walt Crawford’s data.

What about AI?

If we wanted to use public sources, we would consider this to be a classic web research problem. This should suit generative AI (GenAI). ChatGPT is arguably the best-known example of this technology. With disruptor DeepSeek grabbing headlines recently, it seemed opportune to compare both with the benchmarks from our own data. We can also test DeepSeek’s claims to deliver an equivalent level of performance to ChatGPT.

The actual question

Users of GenAI tools rapidly discover that the phraseology and nuances of questions asked – aka the prompt – are vital. The more detail and direction you give them, the better. We used:

what proportion of open access is diamond open access? list numbers of articles and share for each of the last 5 years

Some prompts can run to paragraphs. But we wanted to test the AI’s ability to process a natural sounding and simple question, to interpret the term Diamond, and to see if it would spot the inclusion of fully OA and hybrid. We needed to direct it to the specifics of our required results. The 5-year period was mentioned in the Open Café post. We chose a “one shot” prompt (not asking the model to refine its first answer), as we wanted to see how our virtual research assistant would perform without further direction.

The answer

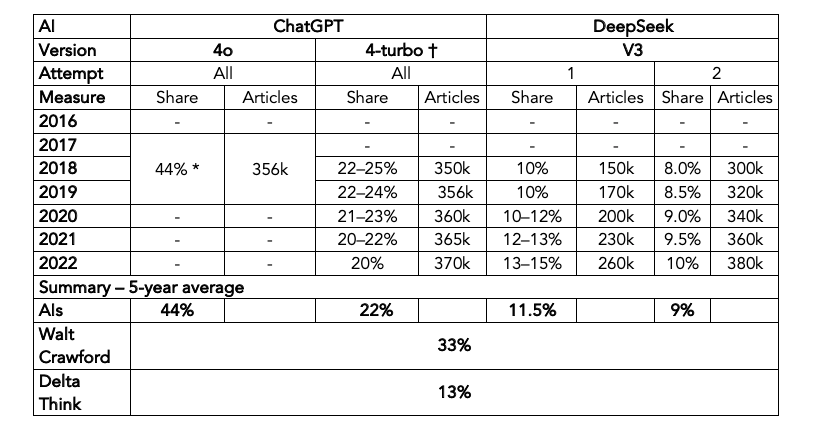

The table below shows the results.

*ChatGPT 4o did not give the answer directly. Instead, it gave Diamond OA & APC-funded OA as separate shares of all output, so we needed to calculate Diamond share of OA.

†ChatGPT 4-turbo with “Reasoning” switched on.
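The recalculation mentioned in the first footnote can be sketched as follows. This assumes that Diamond OA and APC-funded OA together make up all of the OA output in the model's answer, which is a simplification. The function name and the example percentages are hypothetical and are not the figures ChatGPT returned.

```python
def diamond_share_of_oa(diamond_share_of_all, apc_share_of_all):
    """Convert two shares of ALL output into Diamond's share of OA output.

    Assumes OA output = Diamond OA + APC-funded OA (no other OA types).
    """
    oa_share_of_all = diamond_share_of_all + apc_share_of_all
    if oa_share_of_all <= 0:
        raise ValueError("OA share of all output must be positive")
    return diamond_share_of_all / oa_share_of_all


# Hypothetical example: Diamond is 10% of all output, APC-funded OA is 30%.
example = diamond_share_of_oa(0.10, 0.30)
print(f"Diamond share of OA: {example:.0%}")
```

The point of the conversion is to put every model's answer on the same basis (share of OA, not share of all output) before comparing them.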

The table above summarizes the AIs’ answers.

- The technology continues to evolve, so there are different versions of it – different models – available. We see this for ChatGPT, where different versions of the same AI give different responses.

- A particular model may give different replies if you ask the same question more than once. ChatGPT seemed stable, but DeepSeek exhibited this behavior, as indicated in the “Attempt” row.

- ChatGPT 4-turbo has a “Reason” feature. When enabled, this shows its method (reasoning) and then appears to return more verbose results. The annual figures we cited result from the “Reason” mode. Without it, it returned similar numbers to ChatGPT 4o.

- Looking at share of OA output, there’s a big variation between ChatGPT and DeepSeek. There’s a smaller variation between the two DeepSeek attempts. DeepSeek did very well and got close to our estimates.

- The DeepSeek attempts showed big variations in the article numbers. DeepSeek attempt 2 returned similar article numbers to ChatGPT 4-turbo, but the respective shares differed by a factor of roughly 2.5.

- The models were only able to show figures up to 2022.
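One way to read the mismatch between similar article numbers and very different shares is to back out the total OA output that each answer implies. The sketch below assumes the article numbers refer to Diamond OA articles. The function name and all figures are hypothetical, chosen only so that the shares differ by a factor of 2.5.

```python
def implied_total_oa(diamond_articles, diamond_share):
    """Total OA articles implied by a Diamond article count and its share of OA."""
    if not 0 < diamond_share <= 1:
        raise ValueError("share must be in the interval (0, 1]")
    return diamond_articles / diamond_share


# Hypothetical: two answers report the same Diamond article count
# but shares that differ by a factor of 2.5.
total_a = implied_total_oa(100_000, 0.10)
total_b = implied_total_oa(100_000, 0.25)
ratio = total_a / total_b

print(f"Implied totals: {total_a:,.0f} vs {total_b:,.0f} (ratio {ratio:.1f})")
```

If the article counts match, a 2.5x gap in share means the two answers disagree by the same factor about the size of the whole OA market, which points to different underlying samples or definitions.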

A research assistant’s ability to explain their results is important, and so we assessed the models on their communication ability. In practice both research assistants and AI can be asked to refine their work. But for the purposes of this analysis, we used a one-shot prompt.

- The word count of replies varied. ChatGPT 4o had single-paragraph, single-number brevity. The others mixed bullets, prose, and tables.

- Only one model added a hyperlink to its source(s), noted as follows.

- ChatGPT 4o cited encyclo.ouvrirlascience.fr. It was the only model to include a link.

- ChatGPT 4-turbo volunteered sections describing definitions, included headline numbers from some sources, tabulated yearly estimates, discussions comparing results from different sources, caveats on data limitations, and a summary. It cited Wikipedia as its source. It noted that its 5 years of estimates were based on data from 2017-2019, but it’s unclear whether it calculated them or was citing some language it found.

- DeepSeek volunteered brief discussions about definitions, trends, annual estimates, and key sources. The sources seemed to be directions for further reading, including the OA Diamond Journals Study (2021) by cOAlition S and OPERAS, which it considered to provide the most comprehensive view, the DOAJ, and Web of Science/Scopus (noting they underrepresent Diamond OA journals, as many are smaller or regionally focused).

- The verbose models attached general caveats to the data, but none picked up on the subtleties of defining Diamond. If a journal has only one APC-free year, say as an introductory discount, does it really count as a true Diamond or sponsored journal?

Conclusion

In working with and assessing GenAI models, it is important to set expectations correctly. They are more like an inexperienced intern than a seasoned researcher. Prompts need to be refined, several attempts made, and human judgement exercised over the results.

DeepSeek’s results were surprisingly good. It undershot our estimate by a few percentage points. ChatGPT overestimated considerably – perhaps reflecting its use of the problematic sources noted in the original listserv post. The additional material selected by the models was useful in explaining the concepts and drivers behind that data. The differences between our samples and those the models cite explain some of the variance in the data. But this doesn’t explain some of the big differences – e.g. the same numbers of articles but different shares, or vice versa, with a 2.5x variance.

Perhaps the GenAI models, in their current forms, are not ideally suited to this sort of numerically focused research. However, they appear to be progressing in the right direction. (Note: We did not attempt this query on either of the Deep Research models, currently offered by OpenAI and Google Gemini.)

For fun, we rounded off our assessment by asking each of our AIs “what's the answer to life, the universe and everything?” ChatGPT went to the Hitchhiker’s Guide to the Galaxy answer of 42. DeepSeek was more expansive, noting how the question encompassed broader philosophic endeavors. However, a lightweight offline version of DeepSeek that we experimented with gave perhaps the best reply. It concluded that, while 42 is a clever punchline,

“…it also reflects on the human tendency to search for significance where there might not be one.”

Fair point.

---

This article is © 2025 Delta Think, Inc. It is published under a Creative Commons Attribution-NonCommercial 4.0 International License. Please do get in touch if you want to use it in other contexts – we’re usually pretty accommodating.

---

TOP HEADLINES

What Gets Missed in the Discourse on Transformative Agreements – February 12, 2025

"Transformative agreements have a crucial role to play in the transition to open access, and while they may not fix structural problems, they are a reflection of the dynamic publishing landscape and the diverse needs of the academic community."

Serbia Adopts Open Science Platform 2.0 – February 4, 2025

"The Ministry of Science, Technological Development and Innovation of Serbia has adopted a new national open science (OS) policy – the Open Science Platform 2.0 – that applies to all Serbian publicly-funded research projects and programmes. EIFL welcomes the adoption of the policy, which significantly expands the scope of OS efforts in Serbia, and updates the country’s first national OS policy, which was adopted in 2018."

cOAlition S to sunset the Journal Comparison Service in April 2025 – February 3, 2025

"cOAlition S announces it will discontinue the Journal Comparison Service (JCS), effective from 30th April 2025...The JCS was launched in September 2022 to support the Plan S principle that Open Access fees should be transparent and commensurate with the publishers’ services. It was developed following detailed consultations with librarians, publishers, legal experts, and software developers with the aim to shed light on open access publishing fees and services."

Announcing a New Report on Open Educational Resources – January 22, 2025

"In the fall of 2023 we announced the launch of a new research project, funded by the William and Flora Hewlett Foundation, designed to assess the impact and implementation of open educational resource (OER) initiatives at public institutions of higher education. Today, we are publishing the resulting report, based on an initial literature review and interviews with OER leaders in four US states."

European Diamond Capacity Hub launched to strengthen Diamond Open Access publishing in Europe – January 21, 2025

"The European Diamond Capacity Hub (EDCH), a pioneering initiative to advance Diamond Open Access publishing across Europe, was officially launched on 15th January 2025, in Madrid. OPERAS, the research infrastructure for open scholarly communication in the social sciences and humanities, will serve as the fiscal host of the European Diamond Capacity Hub."

OA JOURNAL LAUNCHES

All EMS Press journals open access in 2025 following another successful Subscribe To Open round – February 5, 2025

"EMS Press publishes all journals open access in 2025 for the second consecutive year, following a successful Subscribe To Open (S2O) round...all 22 journals in our Subscribe To Open (S2O) programme will be published as open access for the 2025 subscription period."